Instructional Technology: Domain of Evaluation


Evaluation, the domain concerned with determining the adequacy of instruction, appears throughout the instructional design process (AECT, 2001). In essence, evaluation covers the many activities in the instructional systems design (ISD) process in which information or feedback is gathered to maximize the effectiveness of instruction. Depending on the specific situation and the information needed, instructional technologists use evaluation tools such as observation, surveys, interviews, tests, and performance data.

Evaluation applies these techniques throughout the ISD process, including:

Needs Analysis

Needs analysis, or needs assessment, is defined as “the systematic study of a problem or innovation, incorporating data and opinions from varied sources, in order to make effective decisions or recommendations about what should happen next” (Rossett, 1987, p. 3). Needs analysis is the initial, front-end stage of any performance or instructional project, in which instructional technologists seek to answer the following questions:

· Is instruction needed?

· If so, what learning or performance is needed under what conditions?

· What are the learners’ preferences and learning styles, and what environmental factors will affect learning?

Rossett’s model describes a sequence of information gathering, using a variety of techniques and tools, aimed at quantifying and qualifying:

Actual performance: What is happening now?

Optimal performance: What should be happening?

(The difference between actual and optimal performance is referred to as the performance gap.)

Attitudes: How do people feel about this situation?

Causes: What are the perceived causes of the performance gap?

Solutions: What solutions are recommended?

Instructional or performance technologists should gather and use all the information necessary to identify the root cause of the performance gap.

Criterion-Referenced Measurement

“Criterion-referenced measurement involves techniques for determining learner mastery of pre-specified content” (Seels & Richey, 1994, p. 56). The individual’s performance is measured against a standard, or criterion, rather than against the performance of others who take the same test. Criterion-referenced tests can be contrasted with norm-referenced tests, which are used to rank students and judge performance relative to the performance of others. According to Dick and Carey (2001), there are four types of criterion-referenced tests in the ISD process:

· Entry behavior tests: Do learners possess the required prerequisite skills?

· Pre-instruction tests: Which of the targeted skills have learners already mastered?

· Practice tests: Are learners acquiring the intended skills as they progress?

· Post-instruction tests: Did learners achieve the intended performance objective?
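To make the contrast between criterion-referenced and norm-referenced interpretation concrete, the following is a minimal Python sketch, not drawn from the cited sources. A criterion-referenced decision compares each learner’s score to a fixed mastery cutoff, while a norm-referenced view reports where the learner stands relative to peers. The 80% cutoff, the learner labels, and the scores are all hypothetical, and percentile rank is computed here as the share of the group scoring strictly below the learner, which is only one of several common definitions.

```python
from bisect import bisect_left


def criterion_referenced(score: float, cutoff: float) -> bool:
    """Mastery decision: compare a score to a fixed, pre-specified standard."""
    return score >= cutoff


def percentile_rank(score: float, cohort: list[float]) -> float:
    """Norm-referenced view: percent of the cohort scoring strictly below this score."""
    ranked = sorted(cohort)
    below = bisect_left(ranked, score)  # count of cohort scores < score
    return 100.0 * below / len(ranked)


# Hypothetical post-instruction scores (percent correct) for a small group.
scores = {"A": 92, "B": 81, "C": 78, "D": 64}
MASTERY_CUTOFF = 80  # hypothetical criterion taken from a performance objective

cohort = list(scores.values())
for learner, score in scores.items():
    mastered = criterion_referenced(score, MASTERY_CUTOFF)
    rank = percentile_rank(score, cohort)
    print(f"{learner}: mastered={mastered}, percentile={rank:.0f}")
```

Learner C’s mastery decision would stay the same if every other learner scored higher, whereas C’s percentile rank would drop; that independence from the other test-takers is what distinguishes the criterion-referenced interpretation.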

Formative Evaluation

Formative evaluation is the gathering of information on the adequacy of instruction and the use of that information as a basis for further development (Seels and Glasgow, 1998). It refers to the trial-feedback-revision cycle used to maximize the effectiveness of instructional materials and methods during the design and development phases of instructional systems design. Dick and Carey (The Systematic Design of Instruction, 2001) recommend a scaled-up process in which materials and delivery undergo formative evaluation at three levels:

- Individual learners (minimal cost and time): One-to-one trials help verify the designer’s assumptions and provide feedback on instructional clarity, impact, and feasibility.

- Small group (3-10 learners): Small-group trials determine the effectiveness of the revisions made after the one-to-one trials and identify any remaining issues.

- Larger-scale field trials (20-40 learners): Field trials test the revisions made after the small-group trials and also test the instruction within the context for which it was intended.

At each step of the formative evaluation, data are collected on performance relative to the learning objectives, as well as on learners’ reactions and attitudes. Revisions are then made to the instructional materials, processes, and delivery. This process is efficient because gross inadequacies are identified early, at the individual-learner level, with minimal use of time and resources. As the evaluation proceeds to a small group and then to a larger scale, designers and developers can fine-tune effectiveness using feedback from those settings and make any changes related to group dynamics and field context.

Summative Evaluation

Unlike formative evaluation, which is an “in-process” step, summative evaluation is a post-instruction step that seeks to answer the question:

· Did the instruction meet the desired performance goals outlined in the needs analysis?

The up-front needs analysis defines the desired performance goals and the manner in which they will be measured. Summative evaluation is the process of conducting those measurements, analyzing the results, and reporting the outcomes. Various methods can be used, either by instructional technologists or, where independent opinions are needed, by independent subject matter experts.

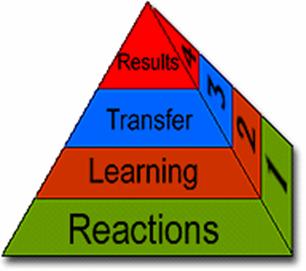

Donald Kirkpatrick’s four levels of training evaluation (Kirkpatrick, 1994) provide a framework that practitioners can use in both formative and summative evaluations.

(Chart adapted from Kirkpatrick, D. L. (1994). Evaluating Training Programs: The Four Levels.)

Level 1 Reactions: Evaluation at this level measures learners’ perceptions of the instruction’s utility and usefulness.

· Was it user-friendly?

· Was it relevant?

· Used in both formative and summative evaluations.

Level 2 Learning: Formalized assessment of changes in learners’ skills or knowledge.

- Pre- and post-instruction measurement

- Criterion-referenced assessment

- Used in both formative and summative evaluations.

Level 3 Transfer: Measures learners’ use of enhanced skills and knowledge in their everyday environment (academic, professional, etc.).

- Direct indicator of instructional benefits.

- Evaluation is difficult: how, and how often, should transfer be measured?

Level 4 Results: Measurement of the instruction’s impact on the organization.

- What organizational or individual performance measures should be affected by the training?

- Used for cost/benefit analysis of instruction.

- Indicators may be indirect and/or may take time to show any impact of the instruction.

Demonstration of Evaluation Competencies in linked artifacts


Domain of Evaluation

| Instructional Technology Competencies | Artifact: Section | Rationale for Inclusion |
|---|---|---|
| Plan and conduct needs assessment. | MIT 502 Analysis and Recommended Strategies for Reduction of Employee Turnover: Performance gap analysis; MIT 542 Situation findings (pdf); MIT 513 Computer Based Instruction for Seizure Management: Needs analysis (pdf) | All of these products relied heavily on training needs assessment skills, following Rossett’s (1999) model, to quantify and qualify the problem, identify its root cause(s), and ultimately justify the need for an instruction-based solution, if any. |
| Plan and conduct evaluation of instruction/training. | MIT 500 Self Instruction Module for Nursing Competency: Performance objectives and assessment; MIT 513 Computer Based Instruction for Seizure Management: Design | Formal and informal formative evaluation was conducted while developing these instructional modules. Both one-to-one and small-group evaluations were used to refine instructional effectiveness and to test environmental issues. |
| Plan and conduct summative evaluation of instruction/training. | MIT 500 Self Instruction Module for Nursing Competency: Module evaluation | A summative post-instruction evaluation was conducted with learners to test learning, transfer, and reaction. |