|

The Field of IT ~ Domain of Evaluation

|

||||||||||||||||||||||||

|

Table 1: Types of Evaluation. From: Joint Committee on Standards for Educational Evaluation (1981).

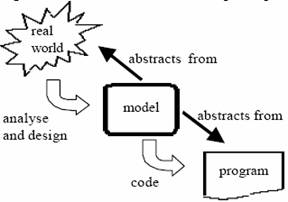

To guide the evaluation process, instructional designers often use an evaluation model. One comprehensive model is the CIPP model proposed by D. L. Stufflebeam (1969). The CIPP model is a guide for evaluating programs, projects, personnel, institutions, and systems. It distinguishes four types of evaluation: Context, Input, Process, and Product (CIPP). Context evaluations determine the effectiveness and appropriateness of the program; Input evaluations determine the feasibility and accountability of the strategies chosen for the project; Process evaluations determine the maintenance of the document and the accessibility of the activities within the program; and Product evaluations determine the program’s success over time.

Anthony Finkelstein (2000) introduces the Objective Oriented model. When using this model, an instructional designer compares the results of learning with the established goal(s). Figure 1 is a graphic representation of the Objective Oriented model.

Figure 1: The Development Process. Objective Oriented Model. Finkelstein (2000).

An instructional designer places value and significance on the results of evaluation to determine whether the learner is transferring the new knowledge. The evaluation domain has four sub-domains: problem analysis, criterion-referenced measurement, formative evaluation, and summative evaluation.

Problem Analysis – is the preliminary step in the evaluation of instructional development. During this step, the instructional designer clarifies learner goals and constraints by using information-gathering and decision-making strategies. Information gathering defines the gap between ‘what is’ and ‘what should be’ in terms of results (Kaufman, 1972). The gap is identified with a matrix similar to the one represented in Table 2:

Table 2: Identifying the Gap Matrix. Kaufman (1972).
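The gap between ‘what is’ and ‘what should be’ can be illustrated with a small calculation. The following is only a minimal sketch: the `GapItem` class, the `rank_gaps` helper, the result names, and the percentages are all hypothetical and are not taken from Kaufman (1972). It assumes each result can be expressed as a single number, which will not always be the case in practice.

```python
from dataclasses import dataclass


@dataclass
class GapItem:
    """One row of a hypothetical gap matrix."""
    result: str            # the result being examined
    what_is: float         # current level of performance (percent)
    what_should_be: float  # desired level of performance (percent)

    @property
    def gap(self) -> float:
        # The gap is the difference between the desired and current results.
        return self.what_should_be - self.what_is


def rank_gaps(items):
    """Return only the items with a positive gap, largest gap first."""
    with_gap = [item for item in items if item.gap > 0]
    return sorted(with_gap, key=lambda item: item.gap, reverse=True)


# Hypothetical data for illustration only.
items = [
    GapItem("Completes safety checklist", what_is=60, what_should_be=95),
    GapItem("Logs incidents within 24 hours", what_is=90, what_should_be=90),
    GapItem("Passes equipment quiz", what_is=70, what_should_be=80),
]

ranked = rank_gaps(items)
for item in ranked:
    print(f"{item.result}: gap of {item.gap} points")
```

Ranking by the size of the gap is one possible way to decide which results to address first; a designer may weigh other constraints as well.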

Once information gathering has identified the gap, the instructional designer develops assessment instruments to measure mastery of the learning. The instruments are based on specific criteria.

Criterion-Referenced Measurement – involves techniques for determining learner mastery of pre-specified content. According to Linn and Miller (2005), the criterion for determining adequacy of learner mastery is the extent to which the learner has met the objective and the attitudes or skills relative to the objective. The instructional designer uses criterion-referenced measurements to determine how well learners performed relative to a standard. According to Seels & Richey (1994), “Criterion-Referenced Post-Measurement determines whether major objectives have been met” (p. 57). The matrix in Table 3 is a practical means for an instructional designer to tally and measure whether the objectives have been met:

Table 3: Matrix for Measuring Objectives
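A tally of this kind can be sketched in a few lines of code. The example below is an illustration under stated assumptions, not the matrix from the source: the `tally_objectives` function, the learner and objective names, the scores, and the 80% criterion are all hypothetical. It compares each learner's score with a fixed standard rather than with other learners, which is the defining feature of criterion-referenced measurement.

```python
def tally_objectives(scores, criterion):
    """Count, for each objective, how many learners met the criterion.

    scores: dict mapping learner -> dict mapping objective -> score (0.0 to 1.0)
    criterion: the pre-specified standard a score must reach or exceed
    """
    tally = {}
    for by_objective in scores.values():
        for objective, score in by_objective.items():
            met = score >= criterion
            tally[objective] = tally.get(objective, 0) + (1 if met else 0)
    return tally


# Hypothetical post-measurement scores, for illustration only.
scores = {
    "Learner A": {"Objective 1": 0.90, "Objective 2": 0.70},
    "Learner B": {"Objective 1": 0.85, "Objective 2": 0.80},
    "Learner C": {"Objective 1": 0.95, "Objective 2": 0.60},
}
CRITERION = 0.80  # assumed mastery standard

tally = tally_objectives(scores, CRITERION)

# An objective counts as met here only if every learner reached the criterion.
objectives_met = [obj for obj, count in tally.items() if count == len(scores)]

print(tally)
print(objectives_met)
```

Requiring every learner to reach the criterion is a deliberately strict rule chosen for the sketch; a designer might instead accept an objective as met when a set proportion of learners reach the standard.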

Once the matrix information is gathered, the instructional designer evaluates the instruction through one-on-one, small-group, and field trials in a process known as formative evaluation. The instructional designer conducts formative evaluation during the design and development stages of a project. At the end of the project, the instructional designer schedules a summative evaluation so that the organization can decide whether the instruction should be adopted. The instructional designer usually does not make the summative adoption decision, because of potential bias.

Formative Evaluation – involves gathering information on adequacy and using the information as a basis for further development. The distinction between Formative Evaluation and Summative Evaluation, according to Michael Scriven (1967), is recorded in Table 4:

Table 4: Formative Evaluation Description

Instructional designers rely on formative evaluations for a review of content through observations, interviews, one-to-one, and small-group strategies. The designer and subject matter experts revise the materials before presenting them for large-group tryouts. Formative evaluations use both quantitative and qualitative measures; one example of measurement is an SPSS analysis. Quantitative evaluations measure the objectives, whereas qualitative evaluations measure the subjective and experiential aspects of the project.

Summative Evaluation – involves gathering information on adequacy and using this information to make decisions about utilization. The definition of Summative Evaluation, according to Michael Scriven (1967), is provided in Table 5:

Table 5: Summative Evaluation Description

Summative evaluation often uses a group comparison study in a quasi-experimental design. According to Seels & Richey (1994), “when a product finally goes to market and is evaluated by an outside agency, which plays a consumer reports role, the purpose of the evaluation is clearly summative –i.e., helping buyers make a wise selection of a product” (p. 58). Summative evaluations require a balance between quantitative and qualitative measures. An instructional designer uses both kinds of measures in the summative evaluation to analyze whether the material met the original objectives, what the impact of the instruction was, and whether the problem was solved. |

||||||||||||||||||||||||